I met Matthias Bröcheler (CTO of Aurelius, the company behind Titan graph) four years ago in a teaching setting at the German national academy for high school students. Back then I was still more of a mathematician than a computer scientist, and my journey toward becoming a computer scientist had only just begun. Matthias was in the middle of his PhD program, and I valued his insights and experience a lot. It was thanks to him that my eyes were first opened to what big data means and how companies like Facebook and Google build their business models around collecting data. In short, Matthias influenced me quite a bit, and I have a lot of respect for him.

I did not start my PhD right away, and we lost contact. I knew he was interested in graphs, but that was about it. Only when I started using Neo4j more and more did I realize that Matthias was also one of the authors of the TinkerPop Blueprints, a set of interfaces for talking to graphs that most graph database vendors use. At that point I looked him up again and found that he was working on Titan, a distributed graph database. I found this promising-looking slide deck:

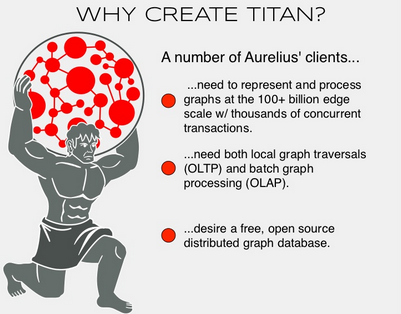

Slide 106:

Slide 107:

But at that time I saw little evidence that Titan would really keep the promises made on slides 106 and 107. In fact, those goals seemed as crazy and unreachable as my former PhD proposal on distributed graph databases. (By the way: reading that proposal now, I am somewhat amused, since I did not really aim for the big, important points the way Titan did.)

During the redesign phase of metalcon we started playing around with HBase to support the architecture of our like button, and especially to be able to integrate it with recommendations coming from Mahout. I began to realize the fundamental differences between HBase (an open-source implementation of Google's Bigtable design) and Cassandra (whose distribution model follows Amazon's Dynamo), which result from the CAP theorem for distributed systems. While looking for information about distributed storage engines I stumbled on Titan again and saw Matthias' talk at the Cassandra Summit 2013. Around minute 21 or 22 the talk gets really interesting. I also suggest skipping the first 15 minutes of the talk:

Let me sum up the amazing parts of the talk:

- 2400 concurrent users against a graph cluster!

- real time!

- 16 different non-trivial queries

- achieving more than 10k requests answered per second!

- graph with more than a billion nodes!

- graph partitioning is pluggable

- graph schema helps indexing for queries

- scaling data size

- scaling data access in terms of concurrent users (especially write operations) is built in at a fundamental level and also seems to work successfully.

- making partitioning pluggable

- requiring a schema for the graph (to enable efficient indexing)

- being able to extend the schema at runtime.

- building on top of either Cassandra (for real time) or HBase (for consistency)

- being compatible with the TinkerPop tech stack

- bringing up an entire framework for analytics and graph processing.

Further resources:

- https://github.com/thinkaurelius/titan

- https://github.com/renepickhardt/metalcon/wiki/Technologytitan (our metalcon wiki page, where we will collect more information about Titan)

- There is also a screencast by Marko on how to set up Titan on an Amazon cluster and query MusicBrainz RDF data: