As I said yesterday, I have been busy over the last months producing content, so here you go. For related work we are most likely going to use neo4j as our core database. This makes sense since we are basically building some kind of social network. Most queries that we need to answer while offering the service or during data mining have a friend-of-a-friend structure.

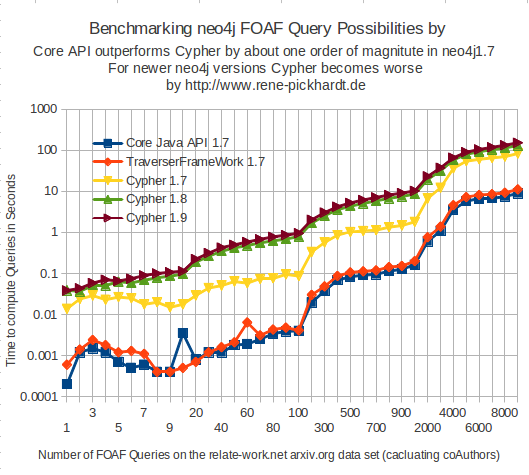

For some of the queries we are doing counting or aggregations, so I was wondering what the most efficient way of querying a neo4j database is. So I ran a benchmark, with quite surprising results.

Just a quick remark: we used a database consisting of papers and authors extracted from arxiv.org, one of the biggest preprint sites available on the web. The data set is available for download and reproduction of the benchmark results at http://blog.related-work.net/data/

The database as a neo4j file is 2 GB (zipped). The schema looks pretty much like this:

    Paper1 <--[ref]--> Paper2
      |                  |
      |[author]          |[author]
      v                  v
    Author1            Author2

For the benchmark we were trying to find coauthors, which is basically a friend-of-a-friend query following the author relationship (or a breadth-first search of depth 2).
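
To make the shape of that query concrete, here is a minimal sketch in plain Java without any neo4j dependency. The class `CoAuthorSketch`, its map-based graph and the sample names are assumptions made purely for illustration and are not part of the benchmark code.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical in-memory stand-in for the paper/author graph (not neo4j).
class CoAuthorSketch {
    private final Map<String, List<String>> papersOf = new HashMap<>();
    private final Map<String, List<String>> authorsOf = new HashMap<>();

    // Adds one [author] edge between an author and a paper.
    void addAuthorship(String author, String paper) {
        papersOf.computeIfAbsent(author, k -> new ArrayList<>()).add(paper);
        authorsOf.computeIfAbsent(paper, k -> new ArrayList<>()).add(author);
    }

    // Counts depth-2 paths author -> paper -> other author.
    // A coauthor who shares two papers is counted twice.
    int countCoAuthorPaths(String author) {
        int resCnt = 0;
        for (String paper : papersOf.getOrDefault(author, Collections.emptyList())) {
            for (String coAuthor : authorsOf.get(paper)) {
                if (coAuthor.equals(author)) continue; // walked back to the start
                resCnt++;
            }
        }
        return resCnt;
    }

    // Same walk, but each coauthor is reported once (sorted for stable output).
    Set<String> distinctCoAuthors(String author) {
        Set<String> result = new TreeSet<>();
        for (String paper : papersOf.getOrDefault(author, Collections.emptyList())) {
            result.addAll(authorsOf.get(paper));
        }
        result.remove(author);
        return result;
    }

    public static void main(String[] args) {
        CoAuthorSketch g = new CoAuthorSketch();
        g.addAuthorship("Alice", "P1");
        g.addAuthorship("Bob", "P1");
        g.addAuthorship("Alice", "P2");
        g.addAuthorship("Bob", "P2");
        g.addAuthorship("Carol", "P2");
        System.out.println(g.countCoAuthorPaths("Alice")); // prints 3
        System.out.println(g.distinctCoAuthors("Alice"));  // prints [Bob, Carol]
    }
}
```

Counting paths and counting distinct coauthors give different numbers as soon as two people share more than one paper, so it is worth deciding which of the two a benchmark is supposed to measure.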

As we know, there are basically three ways of communicating with the neo4j database:

Java Core API

Here you work on the node and relationship objects within Java. Once you have fixed an author node, formulating the query looks pretty much like this:

    // resCnt counts depth-2 paths that end at a different author
    for (Relationship rel : author.getRelationships(RelationshipTypes.AUTHOROF)) {
        Node paper = rel.getOtherNode(author);
        for (Relationship coAuthorRel : paper.getRelationships(RelationshipTypes.AUTHOROF)) {
            Node coAuthor = coAuthorRel.getOtherNode(paper);
            // skip the path that leads back to the start author
            if (coAuthor.getId() == author.getId()) continue;
            resCnt++;
        }
    }

We see that the code can easily become confusing once queries get more complicated. On the other hand, one can easily combine several similar traversals into one big query, which makes readability worse but increases performance.
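
What combining traversals can look like is sketched below in plain Java, again without neo4j. The class `CombinedTraversalSketch`, the `Stats` holder and its three fields are made up for this illustration. The point is only that one walk over the author's papers can feed several aggregates that would otherwise each need their own loop.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class CombinedTraversalSketch {
    // Hypothetical result holder for one combined pass.
    static final class Stats {
        int papers;        // number of papers of the author
        int coAuthorPaths; // depth-2 paths ending at another author
        int largestTeam;   // most authors found on a single paper
    }

    // One walk over the author's papers fills all three aggregates.
    static Stats collect(Map<String, List<String>> papersOf,
                         Map<String, List<String>> authorsOf,
                         String author) {
        Stats s = new Stats();
        for (String paper : papersOf.getOrDefault(author, Collections.emptyList())) {
            s.papers++;
            List<String> team = authorsOf.getOrDefault(paper, Collections.emptyList());
            s.largestTeam = Math.max(s.largestTeam, team.size());
            for (String coAuthor : team) {
                if (!coAuthor.equals(author)) s.coAuthorPaths++;
            }
        }
        return s;
    }

    public static void main(String[] args) {
        Map<String, List<String>> papersOf = new HashMap<>();
        Map<String, List<String>> authorsOf = new HashMap<>();
        papersOf.put("Alice", Arrays.asList("P1", "P2"));
        authorsOf.put("P1", Arrays.asList("Alice", "Bob"));
        authorsOf.put("P2", Arrays.asList("Alice", "Bob", "Carol"));
        Stats s = collect(papersOf, authorsOf, "Alice");
        // prints 2 3 3
        System.out.println(s.papers + " " + s.coAuthorPaths + " " + s.largestTeam);
    }
}
```

The loop body now does three jobs at once, which is exactly the readability cost mentioned above.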

Traverser Framework

The Traverser Framework ships with the Java API and I really like the idea of it. I think it is really easy to understand the meaning of a query, and in my opinion it really helps to keep the code readable.

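    Traversal t = new Traversal();
    // breadth-first along AUTHOROF relationships, keeping only paths of length 2;
    // Uniqueness.NONE places no restriction on revisiting nodes or relationships
    for (Path p : t.description()
            .breadthFirst()
            .relationships(RelationshipTypes.AUTHOROF)
            .evaluator(Evaluators.atDepth(2))
            .uniqueness(Uniqueness.NONE)
            .traverse(author)) {
        Node coAuthor = p.endNode();
        resCnt++;
    }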

Especially if you have a lot of similar queries, or queries that are refinements of other queries, you can save them and extend them using the Traverser Framework. What a cool technique.
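
The save-and-extend idea rests on descriptions being immutable: neo4j documents that each method on a `TraversalDescription` returns a new description and leaves the original intact. The sketch below imitates only that idea in plain Java. `TraversalSpecSketch` and its methods `base`, `atDepth` and `onlyEndNodes` are hypothetical names, not neo4j API, and the traversal itself is deliberately simplistic.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

// Hypothetical, heavily simplified stand-in for a traversal description.
final class TraversalSpecSketch {
    private final int depth;
    private final Predicate<String> endNodeFilter;

    private TraversalSpecSketch(int depth, Predicate<String> endNodeFilter) {
        this.depth = depth;
        this.endNodeFilter = endNodeFilter;
    }

    static TraversalSpecSketch base() {
        return new TraversalSpecSketch(1, node -> true);
    }

    // Refinements return a new object, so a saved description stays reusable.
    TraversalSpecSketch atDepth(int newDepth) {
        return new TraversalSpecSketch(newDepth, endNodeFilter);
    }

    TraversalSpecSketch onlyEndNodes(Predicate<String> extra) {
        return new TraversalSpecSketch(depth, endNodeFilter.and(extra));
    }

    // Returns the end node of every path of exactly `depth` steps, with no uniqueness check.
    List<String> traverse(Map<String, List<String>> neighbours, String start) {
        List<String> frontier = Collections.singletonList(start);
        for (int step = 0; step < depth; step++) {
            List<String> next = new ArrayList<>();
            for (String node : frontier) {
                next.addAll(neighbours.getOrDefault(node, Collections.emptyList()));
            }
            frontier = next;
        }
        List<String> ends = new ArrayList<>();
        for (String node : frontier) {
            if (endNodeFilter.test(node)) ends.add(node);
        }
        return ends;
    }

    public static void main(String[] args) {
        Map<String, List<String>> g = new HashMap<>();
        g.put("Alice", Arrays.asList("P1"));
        g.put("Bob", Arrays.asList("P1"));
        g.put("P1", Arrays.asList("Alice", "Bob"));

        TraversalSpecSketch coAuthors = base().atDepth(2);   // saved query
        TraversalSpecSketch others =
                coAuthors.onlyEndNodes(n -> !n.equals("Alice")); // refinement

        System.out.println(coAuthors.traverse(g, "Alice")); // prints [Alice, Bob]
        System.out.println(others.traverse(g, "Alice"));    // prints [Bob]
    }
}
```

Because nothing restricts revisiting in this sketch, the saved depth-2 query also walks back to the start node, and the refinement filters it out without touching the saved query.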

Cypher Query Language

And then there is the Cypher query language, an interface pushed a lot by neo4j. If you look at the query you can totally understand why. It is a really beautiful language that is close to SQL (looking at Stack Overflow, it is actually frightening how many people are trying to answer FOAF queries using MySQL) but still emphasizes the graph-like structure.

    ExecutionEngine engine = new ExecutionEngine(graphDB);
    String query = "START author=node(" + author.getId()
            + ") MATCH author-[:" + RelationshipTypes.AUTHOROF.name()
            + "]-()-[:" + RelationshipTypes.AUTHOROF.name()
            + "]- coAuthor RETURN coAuthor";
    ExecutionResult result = engine.execute(query);
    // the result is consumed through a Scala iterator over the coAuthor column
    scala.collection.Iterator<Node> it = result.columnAs("coAuthor");
    while (it.hasNext()) {
        Node coAuthor = it.next();
        resCnt++;
    }
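
Since the query is assembled by string concatenation, it can help to see the text that actually reaches the engine. The following sketch repeats only the concatenation in a standalone helper. `CypherQuerySketch`, `coAuthorQuery` and the node id 42 are illustrative assumptions, and it assumes that `RelationshipTypes.AUTHOROF.name()` yields the string `AUTHOROF`.

```java
class CypherQuerySketch {
    // Builds the query text with the same concatenation pattern as above.
    // authorId and relType are plain values here instead of neo4j objects.
    static String coAuthorQuery(long authorId, String relType) {
        return "START author=node(" + authorId
                + ") MATCH author-[:" + relType
                + "]-()-[:" + relType
                + "]- coAuthor RETURN coAuthor";
    }

    public static void main(String[] args) {
        // prints: START author=node(42) MATCH author-[:AUTHOROF]-()-[:AUTHOROF]- coAuthor RETURN coAuthor
        System.out.println(coAuthorQuery(42, "AUTHOROF"));
    }
}
```

Read left to right, the pattern is the same two hops as in the other two variants: from the author over one authorship relationship to an anonymous paper node, and over a second one to `coAuthor`.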

I was always wondering about the performance of this query language. Writing a query language is a very complex task, and the more expressive the language is, the harder it is to achieve good performance (the same holds true for SPARQL in the Semantic Web). And let's just point out that Cypher is quite expressive.

What were the results?

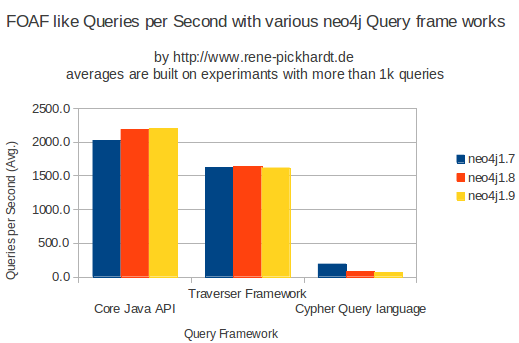

- The Core API is able to answer about 2000 friend-of-a-friend queries per second (I have to admit, on a very sparse network).

- The Traverser Framework is about 25% slower than the Core API.

- Worst is Cypher, which is at least one order of magnitude slower and only able to answer about 100 FOAF-like queries per second.
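
For readers who want to produce numbers of this kind themselves, here is a minimal sketch of a throughput measurement. It is not the benchmark code (that is linked at the end of the post). `ThroughputSketch`, `queriesPerSecond` and `slowdown` are hypothetical names, the measured query is a dummy, and "slower" is assumed to mean lower throughput.

```java
import java.util.function.IntSupplier;

class ThroughputSketch {
    // Written after each run so the JIT cannot simply drop the query results.
    static volatile long sink;

    // Runs the query `runs` times and returns completed queries per second.
    static double queriesPerSecond(IntSupplier query, int runs) {
        long total = 0;
        long start = System.nanoTime();
        for (int i = 0; i < runs; i++) {
            total += query.getAsInt();
        }
        long elapsedNanos = Math.max(1, System.nanoTime() - start);
        sink = total;
        return runs * 1e9 / elapsedNanos;
    }

    // Relative slowdown of `otherQps` against `baselineQps`, e.g. 0.25 for "25% slower".
    static double slowdown(double baselineQps, double otherQps) {
        return 1.0 - otherQps / baselineQps;
    }

    public static void main(String[] args) {
        // Dummy query standing in for one coauthor lookup.
        IntSupplier dummy = () -> 1;
        queriesPerSecond(dummy, 10_000); // warm-up run, result ignored
        System.out.println(queriesPerSecond(dummy, 100_000) + " queries/s");
        System.out.println(slowdown(2000, 100)); // about 0.95 for the rounded figures above
    }
}
```

With the rounded figures from the list, 100 against 2000 queries per second is a factor of 20, which is where "at least one order of magnitude" comes from.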

I was shocked, so I talked with Andres Taylor from neo4j, who mainly works on Cypher. He asked me which neo4j version I used and I said it was 1.7. He told me I should check out 1.9, since Cypher has become more performant. So I ran the benchmarks on neo4j 1.8 and neo4j 1.9. Unfortunately, Cypher became slower in the newer neo4j releases.

Quotes from Andres Taylor:

Cypher is just over a year old. Since we are very constrained on developers, we have had to be very picky about what we work on. The focus in this first phase has been to explore the language, and learn about how our users use the query language, and to expand the feature set to a reasonable level.

I believe that Cypher is our future API. I know you can very easily outperform Cypher by handwriting queries. Like every language ever created, in the beginning you can always do better than the compiler by writing by hand, but eventually the compiler catches up.

Conclusion:

So far I have only used the Java Core API when working with neo4j, and I will continue to do so.

If you are in a high-speed scenario (I believe every web application is one), you should really think about switching to the neo4j Java Core API for writing your queries. It might not be as nice looking as Cypher or the Traverser Framework, but the gain in speed pays off.

Also, I personally like the amount of control that you have when traversing with the Core API yourself.

Additionally, I will soon post an article on why scripting languages like PHP, Python or Ruby aren’t suitable for building web applications anyway. So switching to the Core API makes sense for several reasons.

The complete source code of the benchmark can be found at https://github.com/renepickhardt/related-work.net/blob/master/RelatedWork/src/net/relatedwork/server/neo4jHelper/benchmarks/FriendOfAFriendQueryBenchmark.java (commit: 0d73a2e6fc41177f3249f773f7e96278c1b56610)

The detailed results can be found in this spreadsheet.